AI and its (mis)uses in medical research and practice

Infection and Immunity spring meeting

Department of Data Science Methods, Julius Center, University Medical Center Utrecht

2024-04-18

What is AI?


What is artificial intelligence?

computers doing tasks that normally require intelligence

What is artificial general intelligence?

General-purpose AI that, like humans, performs a wide range of tasks across different domains

AI subsumes rule-based systems and machine learning

- Rule-based AI: knowledge base of rules

- Machine learning: statistical learning from examples

  - (traditional) machine learning: logistic regression, support vector machines (SVM), random forests (RF), gradient boosting machines (GBM)

  - modern machine learning: deep learning and foundation models

Rule-based systems are AI

- rule: all cows are animals

- observation: this is a cow \(\to\) it is an animal

- applications:

  - medication interaction checkers

  - bedside patient monitors
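A rule-based system can be sketched in a few lines as a knowledge base of explicit rules plus a procedure that deduces conclusions from them. This is a minimal sketch under stated assumptions: the rule sets and function names are hypothetical, the interaction rules are a tiny illustrative subset and not a clinical knowledge base, and the extra rule "all animals are living things" is added only to show that rules can be chained.

```python
from __future__ import annotations

from itertools import combinations

# Knowledge base of "is-a" rules (hypothetical); chaining shows deduction.
IS_A = {"cow": "animal", "animal": "living thing"}

# Illustrative interaction rules: a tiny subset, NOT a clinical knowledge base.
INTERACTIONS = {
    frozenset({"warfarin", "aspirin"}): "increased bleeding risk",
    frozenset({"simvastatin", "clarithromycin"}): "increased risk of myopathy",
}


def deduce_categories(thing: str) -> list[str]:
    """Apply is-a rules transitively, e.g. cow -> animal -> living thing."""
    categories = []
    while thing in IS_A:
        thing = IS_A[thing]
        categories.append(thing)
    return categories


def check_interactions(medications: list[str]) -> list[str]:
    """Return one warning for every medication pair that matches a rule."""
    warnings = []
    for a, b in combinations(sorted(set(medications)), 2):
        effect = INTERACTIONS.get(frozenset({a, b}))
        if effect is not None:
            warnings.append(f"{a} + {b}: {effect}")
    return warnings


print(deduce_categories("cow"))  # ['animal', 'living thing']
print(check_interactions(["warfarin", "metformin", "aspirin"]))
# ['aspirin + warfarin: increased bleeding risk']
```

Every conclusion traces back to an explicit rule, so the knowledge is easy to inspect and fast to apply, but the system cannot conclude anything the rules do not imply.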

machine learning: statistical learning from examples

ML tasks

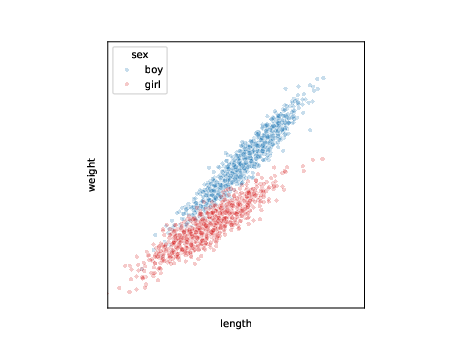

data:

| i | length (cm) | weight (kg) | sex |
|---|---|---|---|
| 1 | 137 | 30 | boy |
| 2 | 122 | 24 | girl |
| 3 | 101 | 18 | girl |
| … | … | … | … |

\[l_i,w_i,s_i \sim p(l,w,s)\]

ML tasks: generation

use the samples to learn a model \(p_{\theta}\) of the joint distribution \(p\), then draw new samples from it: \[ l_j,w_j,s_j \sim p_{\theta}(l,w,s) \]

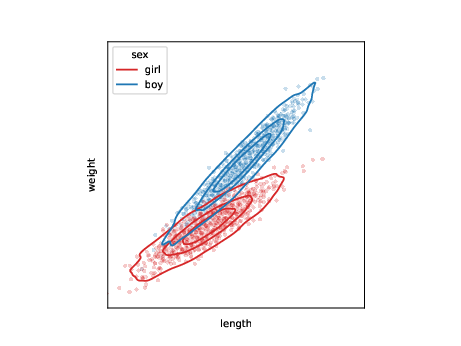

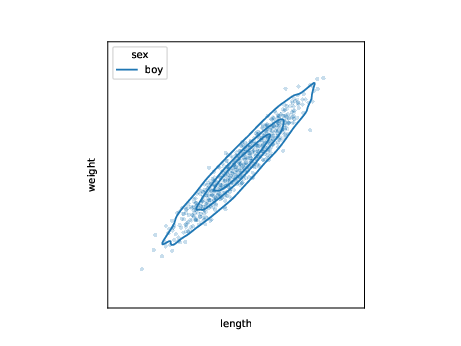
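To make the generation task concrete, here is a minimal sketch in Python. It assumes a hypothetical "true" distribution (the means, slope and noise levels in `simulate_children` are made up, not real growth data) and a simple parametric model \(p_{\theta}(l,w,s) = p_{\theta}(s)\,p_{\theta}(l,w|s)\) with one bivariate normal per sex; all function names are illustrative.

```python
import math
import random


def simulate_children(n, rng):
    """Stand-in for the unknown true p(l, w, s); all numbers are made up."""
    rows = []
    for _ in range(n):
        sex = "boy" if rng.random() < 0.5 else "girl"
        length = rng.gauss(125.0 if sex == "boy" else 122.0, 12.0)  # cm
        weight = 0.4 * length - 22.0 + rng.gauss(0.0, 2.0)          # kg
        rows.append((length, weight, sex))
    return rows


def fit_gaussian(pairs):
    """Sample means, variances and covariance of (length, weight) pairs."""
    n = len(pairs)
    mean_l = sum(x for x, _ in pairs) / n
    mean_w = sum(y for _, y in pairs) / n
    var_l = sum((x - mean_l) ** 2 for x, _ in pairs) / (n - 1)
    var_w = sum((y - mean_w) ** 2 for _, y in pairs) / (n - 1)
    cov = sum((x - mean_l) * (y - mean_w) for x, y in pairs) / (n - 1)
    return mean_l, mean_w, var_l, var_w, cov


def fit_joint(rows):
    """Learn p_theta(l, w, s) = p_theta(s) * N((l, w) | mean_s, cov_s)."""
    p_boy = sum(sex == "boy" for _, _, sex in rows) / len(rows)
    params = {
        s: fit_gaussian([(length, weight) for length, weight, sex in rows if sex == s])
        for s in ("boy", "girl")
    }
    return p_boy, params


def sample_lw(gaussian, rng):
    """Draw (l, w) from a bivariate normal via its 2x2 Cholesky factor."""
    mean_l, mean_w, var_l, var_w, cov = gaussian
    a = math.sqrt(var_l)
    b = cov / a
    c = math.sqrt(var_w - b * b)
    z1, z2 = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
    return mean_l + a * z1, mean_w + b * z1 + c * z2


def sample_joint(model, rng):
    """Generation: s ~ p_theta(s), then (l, w) ~ p_theta(l, w | s)."""
    p_boy, params = model
    sex = "boy" if rng.random() < p_boy else "girl"
    length, weight = sample_lw(params[sex], rng)
    return length, weight, sex


rng = random.Random(0)
model = fit_joint(simulate_children(1000, rng))
new_children = [sample_joint(model, rng) for _ in range(5)]
```

Calling `sample_lw(model[1]["boy"], rng)` on its own gives \(l_j,w_j \sim p_{\theta}(l,w|s=\text{boy})\), i.e. the conditional generation task.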

ML tasks: conditional generation

use the samples to learn a model of the conditional distribution of length and weight given sex: \[ l_j,w_j \sim p_{\theta}(l,w|s=\text{boy}) \]

| task | formulation |
|---|---|
| generation | \(l_j,w_j,s_j \sim p_{\theta}(l,w,s)\) |

ML tasks: conditional generation 2

use the samples to learn a model of the conditional distribution of a single variable (sex) given the others: \[ s_j \sim p_{\theta}(s|l=l',w=w') \]

| task | formulation |
|---|---|
| generation | \(l_j,w_j,s_j \sim p_{\theta}(l,w,s)\) |
| conditional generation | \(l_j,w_j \sim p_{\theta}(l,w \mid s=\text{boy})\) |

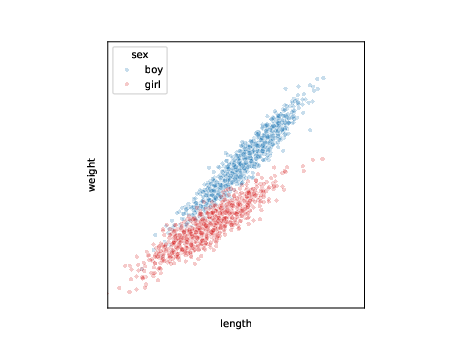

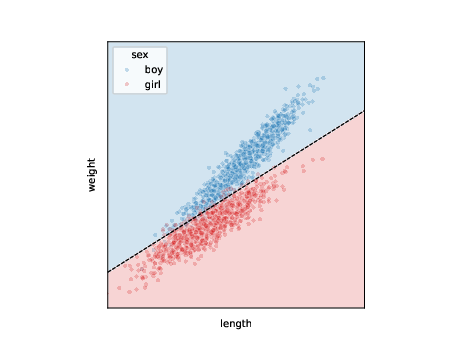

ML tasks: discrimination

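call this one variable the outcome and classify when its predicted probability (its expected value) passes a threshold (e.g. 0.5): \[ \hat{s}_j = \begin{cases} \text{boy} & \text{if } p_{\theta}(s=\text{boy}|l=l',w=w') > 0.5\\ \text{girl} & \text{otherwise} \end{cases} \]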

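| task | formulation |
|---|---|
| generation | \(l_j,w_j,s_j \sim p_{\theta}(l,w,s)\) |
| conditional generation | \(l_j,w_j \sim p_{\theta}(l,w \mid s=\text{boy})\) |
| conditional generation 2 | \(s_j \sim p_{\theta}(s \mid l=l',w=w')\) |
| discrimination | \(p_{\theta}(s=\text{boy} \mid l=l',w=w') > 0.5\) |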

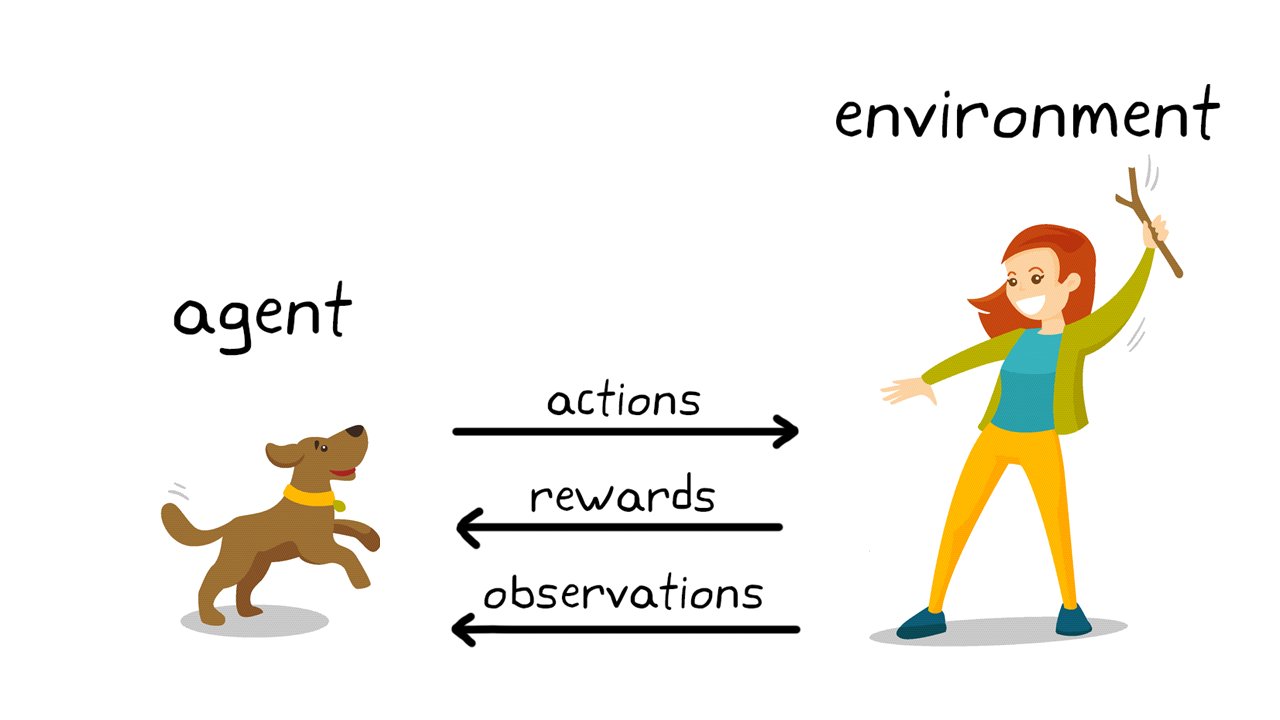
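Conditional generation of one variable and discrimination use the same conditional model \(p_{\theta}(s|l,w)\): the first samples \(s\) from it, the second thresholds it. A minimal sketch, assuming simulated data with made-up parameters and a logistic regression fitted by plain batch gradient ascent on the Bernoulli log-likelihood (the "criterion that measures model fit"); the function names are hypothetical.

```python
import math
import random


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def simulate(n, rng):
    """Hypothetical ((length cm, weight kg), label) data, label 1 = boy; numbers made up."""
    data = []
    for _ in range(n):
        boy = rng.random() < 0.5
        length = rng.gauss(132.0 if boy else 118.0, 10.0)
        weight = 0.4 * length - 22.0 + rng.gauss(0.0, 2.0)
        data.append(((length, weight), 1 if boy else 0))
    return data


def fit_logistic(data, lr=0.5, epochs=300):
    """Fit p_theta(s=boy | l, w) by gradient ascent on the log-likelihood."""
    n = len(data)
    means = [sum(x[j] for x, _ in data) / n for j in range(2)]
    sds = [math.sqrt(sum((x[j] - means[j]) ** 2 for x, _ in data) / n) for j in range(2)]

    def features(x):
        # intercept plus standardized length and weight
        return [1.0] + [(x[j] - means[j]) / sds[j] for j in range(2)]

    rows = [(features(x), y) for x, y in data]
    theta = [0.0, 0.0, 0.0]
    for _ in range(epochs):
        grad = [0.0, 0.0, 0.0]
        for f, y in rows:
            p = sigmoid(sum(t * fi for t, fi in zip(theta, f)))
            for k in range(3):
                grad[k] += (y - p) * f[k]
        theta = [t + lr * g / n for t, g in zip(theta, grad)]
    return lambda x: sigmoid(sum(t * fi for t, fi in zip(theta, features(x))))


def sample_sex(p_boy, x, rng):
    """Conditional generation 2: s ~ p_theta(s | l, w)."""
    return "boy" if rng.random() < p_boy(x) else "girl"


def classify(p_boy, x, threshold=0.5):
    """Discrimination: predict 'boy' when p_theta(s=boy | l, w) > threshold."""
    return "boy" if p_boy(x) > threshold else "girl"


rng = random.Random(0)
p_boy = fit_logistic(simulate(500, rng))
child = (137.0, 30.0)                 # row 1 of the data table
print(round(p_boy(child), 2))         # p_theta(s=boy | l=137, w=30)
print(sample_sex(p_boy, child, rng))  # a random draw
print(classify(p_boy, child))         # thresholded at 0.5
```

Swapping logistic regression for a random forest or neural network changes how \(p_{\theta}\) is learned, not how it is used for these two tasks.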

ML tasks: reinforcement learning

- e.g. computers playing games

- probably less useful for clinical research, as it requires many experiments

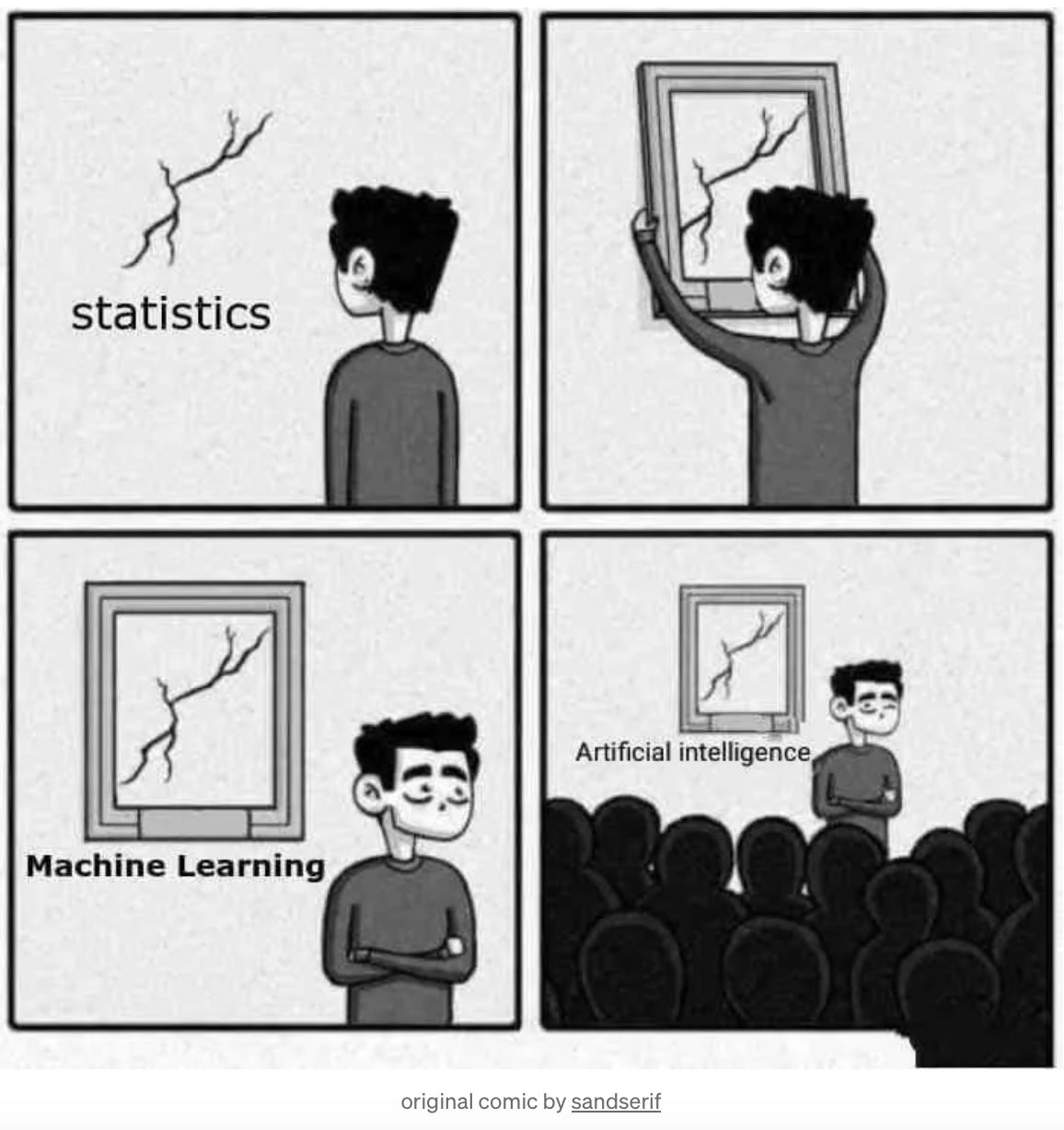

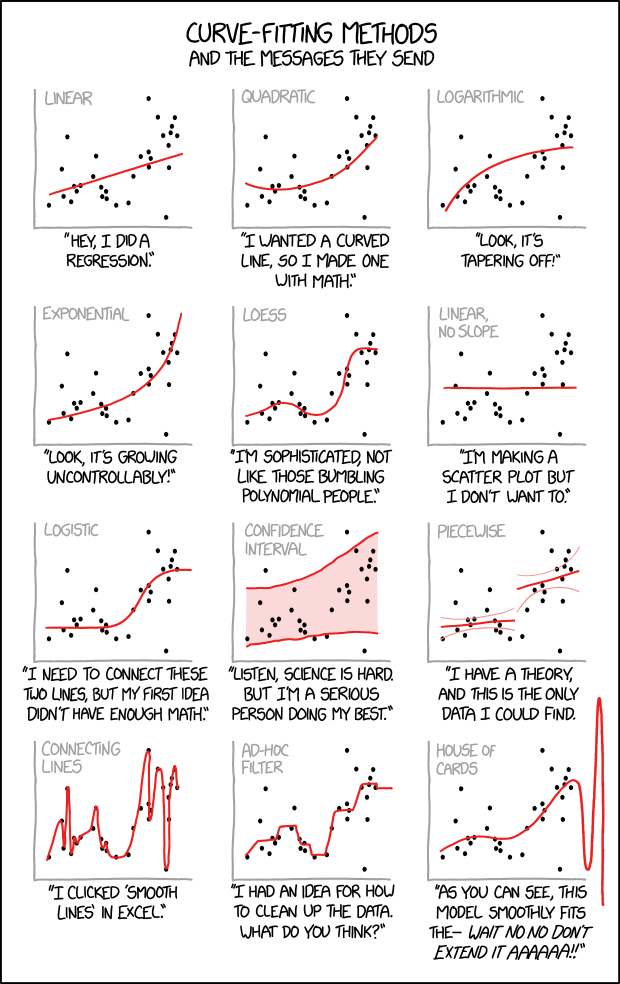

Machine learning is statistical learning with flexible models

- There is no fundamental difference between statistics and machine learning

- both optimize parameters to improve some criterion (loss / likelihood) that measures model fit to data

- models used in machine learning are more flexible

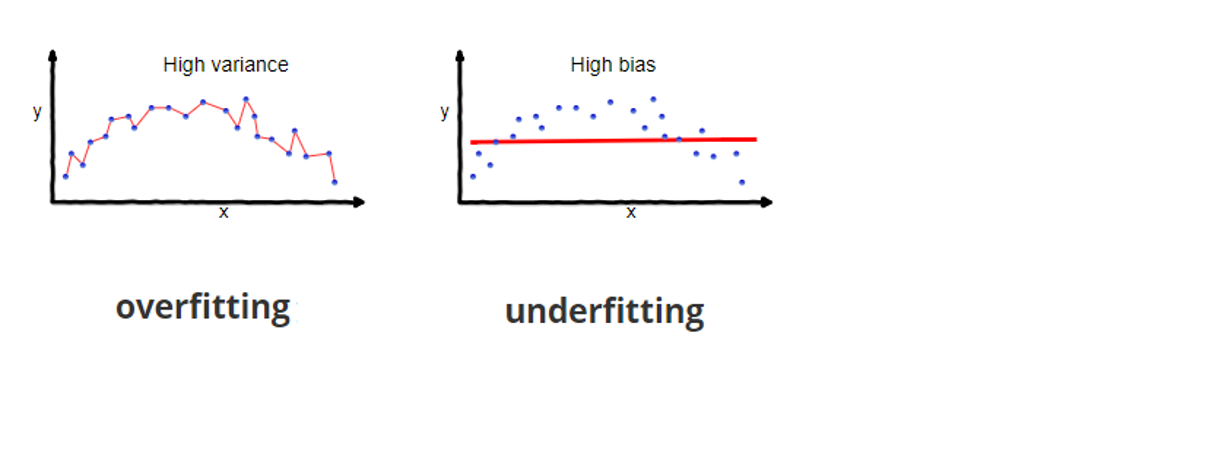

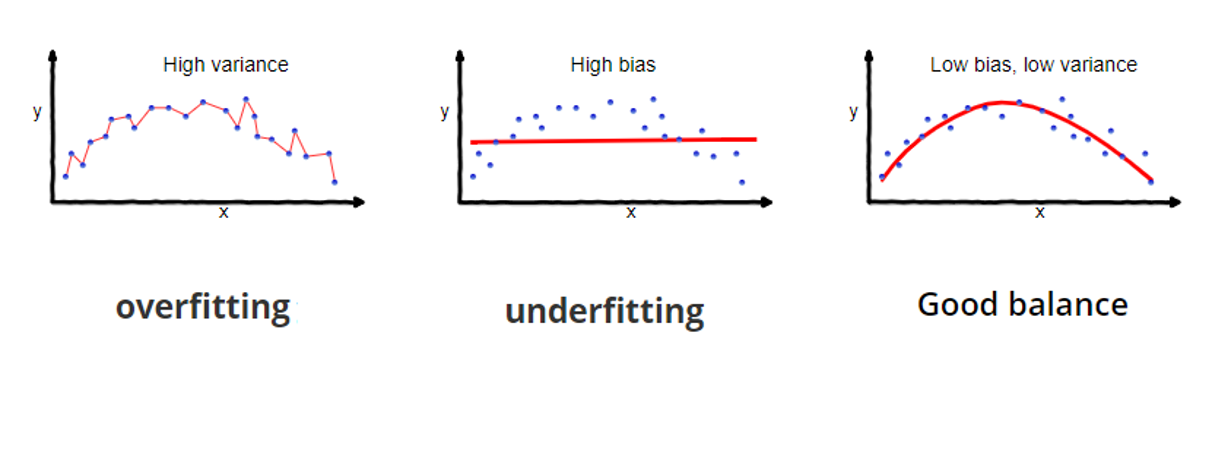

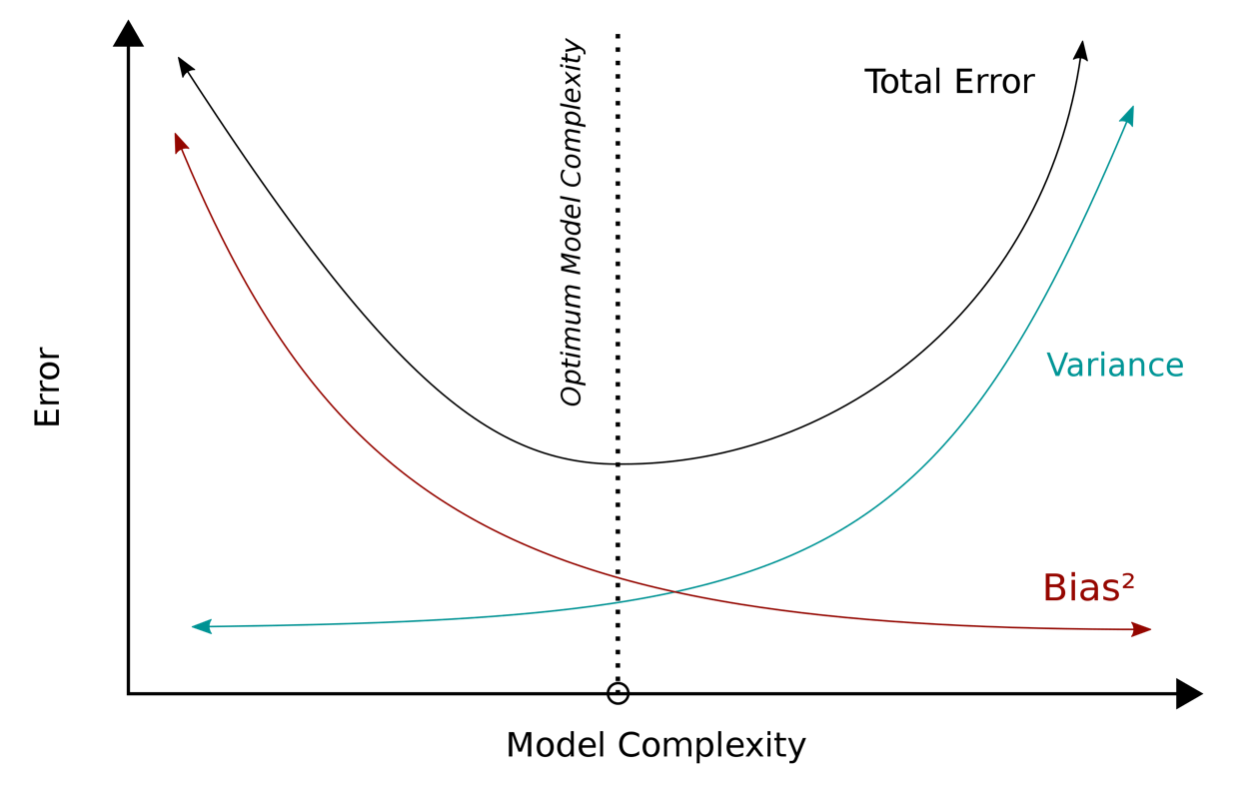

ML models can fit a wider range of functions, but are also more likely to overfit

We should pick the ‘right’ amount of model complexity
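The flexibility/overfitting trade-off can be seen with k-nearest-neighbour regression, swapped in here purely as an illustration (the slides do not use it). With k = 1 the model reproduces the training data exactly (zero training error) but typically does worse on new data than a smoother k; with k equal to the training size it predicts the overall mean and underfits. The data-generating setup and names below are assumptions for this sketch.

```python
import math
import random


def make_data(n, rng):
    """Hypothetical 1-D data: y = sin(x) + noise (made-up setup)."""
    xs = [rng.uniform(0.0, 6.0) for _ in range(n)]
    return [(x, math.sin(x) + rng.gauss(0.0, 0.3)) for x in xs]


def knn_predict(train, x, k):
    """Average the outcomes of the k training points closest to x."""
    nearest = sorted(train, key=lambda p: abs(p[0] - x))[:k]
    return sum(y for _, y in nearest) / k


def mse(train, data, k):
    """Mean squared error on `data` of the k-NN model built from `train`."""
    return sum((y - knn_predict(train, x, k)) ** 2 for x, y in data) / len(data)


rng = random.Random(42)
train, test = make_data(50, rng), make_data(500, rng)
for k in (1, 5, 50):
    # small k: very flexible; k = len(train): always predicts the overall mean
    print(f"k={k:2d}  train MSE={mse(train, train, k):.3f}  test MSE={mse(train, test, k):.3f}")
```

Choosing k by error on held-out data rather than on the training data is one way to pick the complexity.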

What is a large-language model like chatGPT?


What is chatGPT?

a stochastic auto-regressive next-word predictor with a chatbot interface

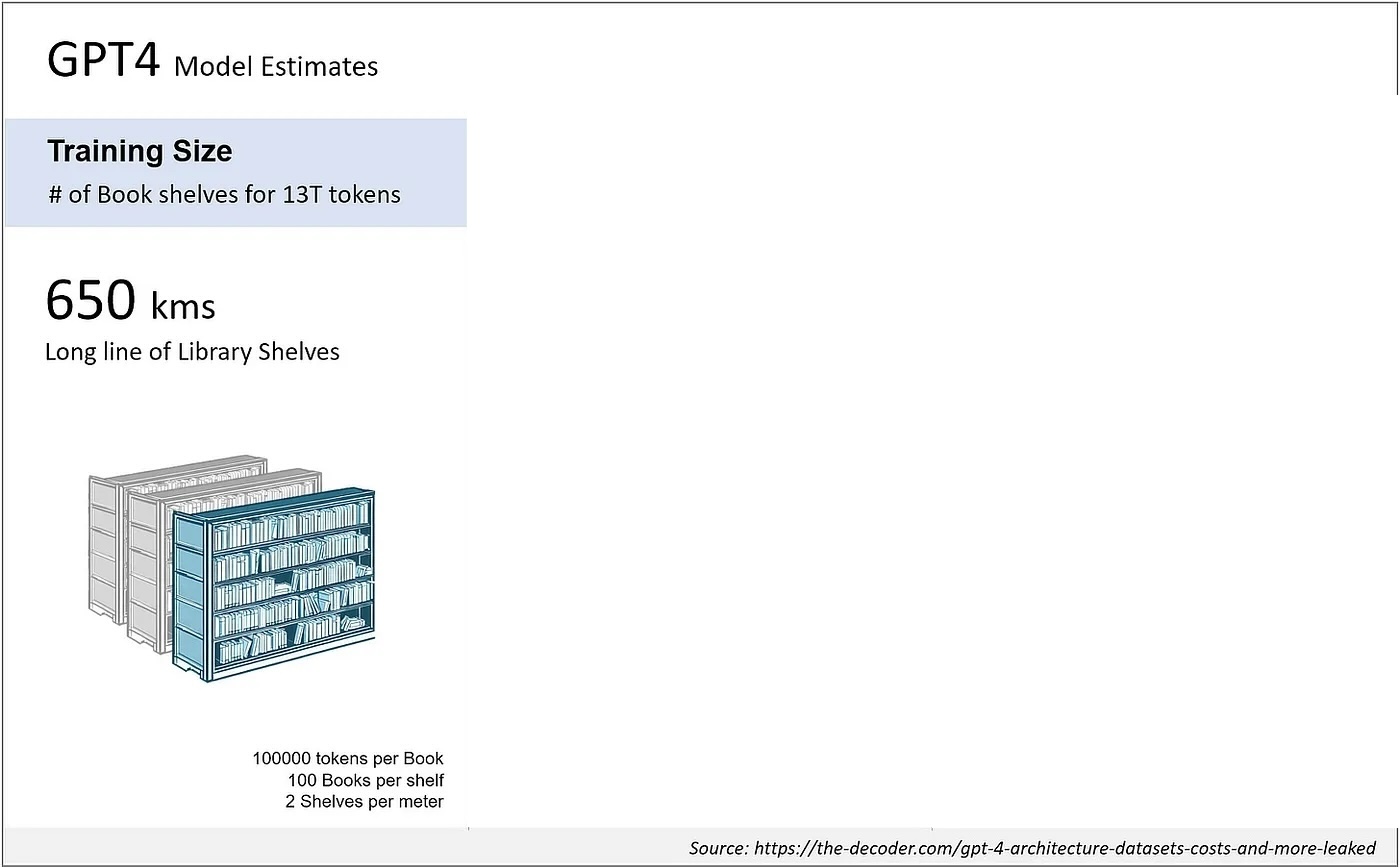

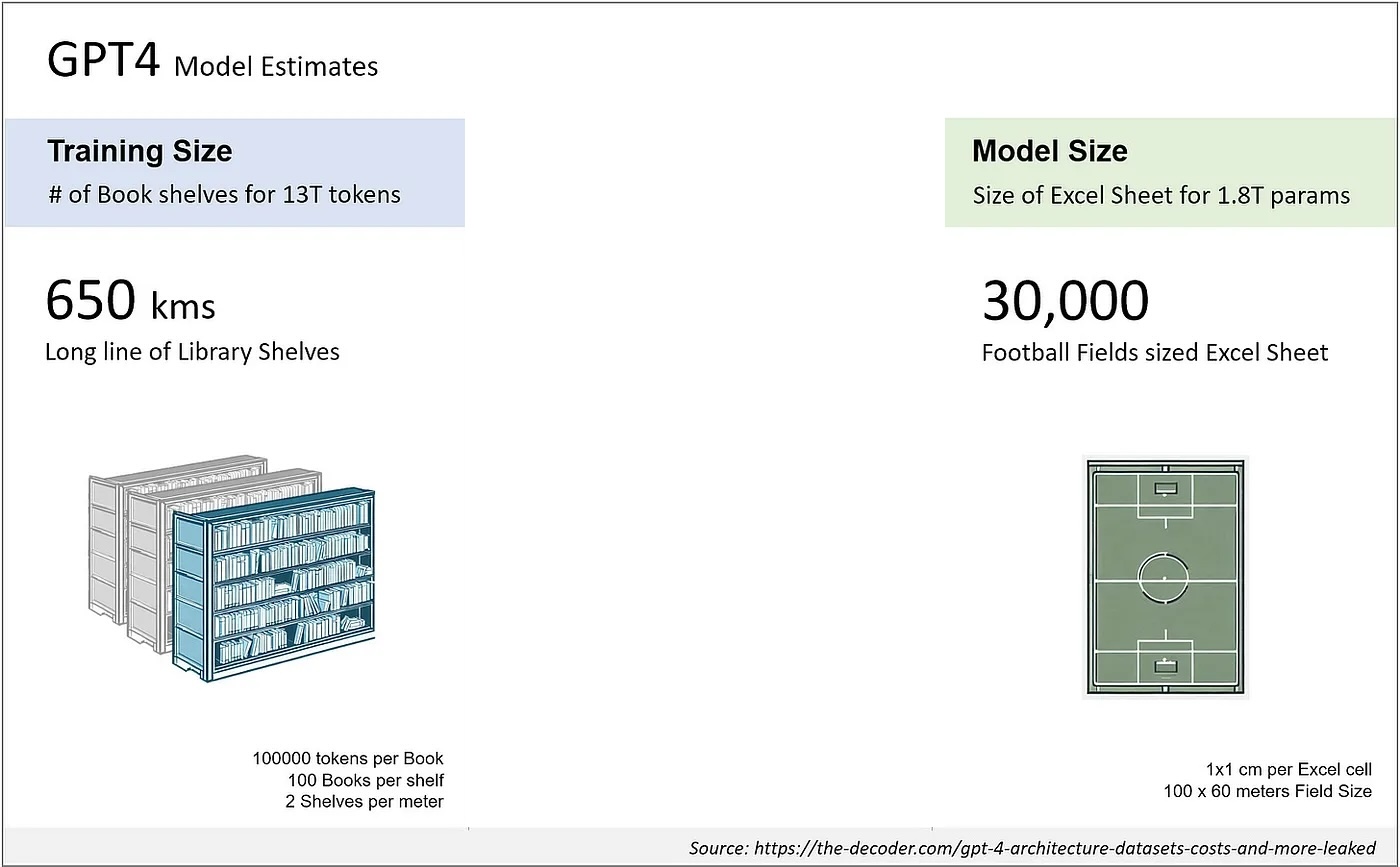

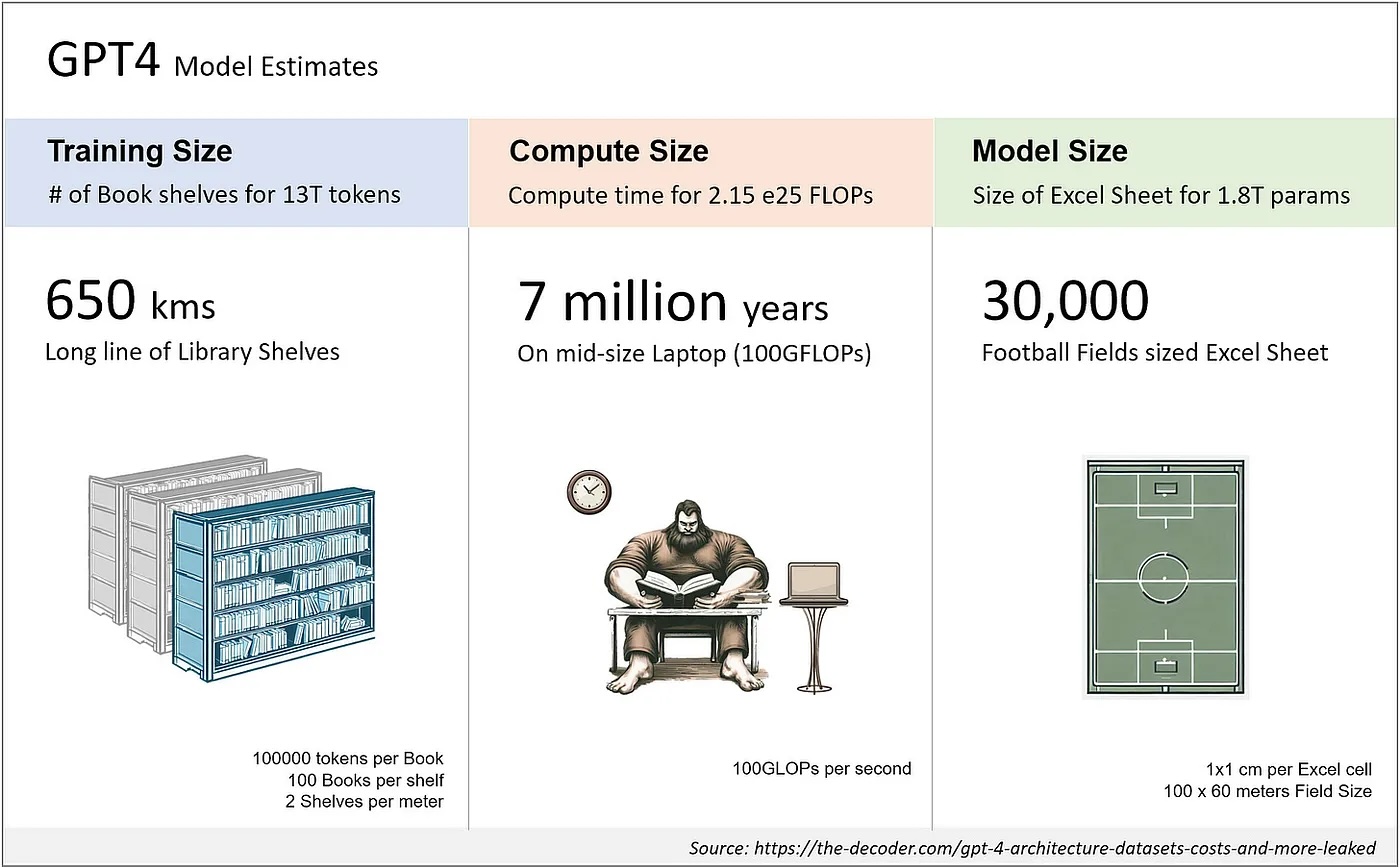

- trained by predicting the next <…>

- in a large corpus of text

- with a large model

- for a long time on expensive hardware

auto-regressive conditional generation:

\[\begin{align} \text{word}_1 &\sim p_{\text{chatGPT}}(\text{word}|\text{prompt})\\ \end{align}\]

auto-regressive conditional generation:

\[\begin{align} \text{word}_1 &\sim p_{\text{chatGPT}}(\text{word}|\text{prompt})\\ \text{word}_2 &\sim p_{\text{chatGPT}}(\text{word}|\text{word}_1,\text{prompt}) \end{align}\]

auto-regressive conditional generation:

\[\begin{align} \text{word}_1 &\sim p_{\text{chatGPT}}(\text{word}|\text{prompt})\\ \text{word}_2 &\sim p_{\text{chatGPT}}(\text{word}|\text{word}_1,\text{prompt})\\ &\;\;\vdots\\ \text{word}_n &\sim p_{\text{chatGPT}}(\text{word}|\text{word}_{n-1},\ldots,\text{word}_1,\text{prompt}) \end{align}\]

auto-regressive conditional generation:

\[\begin{align} \text{word}_1 &\sim p_{\text{chatGPT}}(\text{word}|\text{prompt})\\ \text{word}_2 &\sim p_{\text{chatGPT}}(\text{word}|\text{word}_1,\text{prompt})\\ &\;\;\vdots\\ \text{word}_n &\sim p_{\text{chatGPT}}(\text{word}|\text{word}_{n-1},\ldots,\text{word}_1,\text{prompt})\\ \text{STOP} &\sim p_{\text{chatGPT}}(\text{word}|\text{word}_{n},\ldots,\text{word}_1,\text{prompt}) \end{align}\]
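The sampling loop in these equations can be sketched with a toy next-word table. This is an illustration only: the vocabulary and probabilities are made up, and unlike a real LLM (a neural network over tokens that conditions on the whole prefix) the hypothetical `next_word_probs` looks only at the last word. The loop itself matches the equations: sample a word, append it to the conditioning history, repeat until STOP.

```python
import random

# Hypothetical next-word table; probabilities are made up.
NEXT_WORD = {
    "<prompt>": {"the": 0.7, "a": 0.3},
    "the": {"patient": 0.6, "model": 0.4},
    "a": {"patient": 0.5, "model": 0.5},
    "patient": {"recovered": 0.5, "STOP": 0.5},
    "model": {"predicts": 0.7, "STOP": 0.3},
    "predicts": {"the": 0.5, "STOP": 0.5},
    "recovered": {"STOP": 1.0},
}


def next_word_probs(history):
    """Stand-in for p(word | word_{n-1}, ..., word_1, prompt); uses only the last word."""
    return NEXT_WORD[history[-1]]


def generate(rng, max_words=20):
    """Auto-regressive sampling: draw one word at a time until STOP."""
    history = ["<prompt>"]
    while len(history) - 1 < max_words:
        probs = next_word_probs(history)
        word = rng.choices(list(probs), weights=list(probs.values()))[0]
        if word == "STOP":
            break
        history.append(word)  # the sampled word becomes part of the condition
    return history[1:]


rng = random.Random(1)
print(" ".join(generate(rng)))
```

Because each word is drawn at random, running it with a different seed gives a different, equally fluent-looking sentence.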

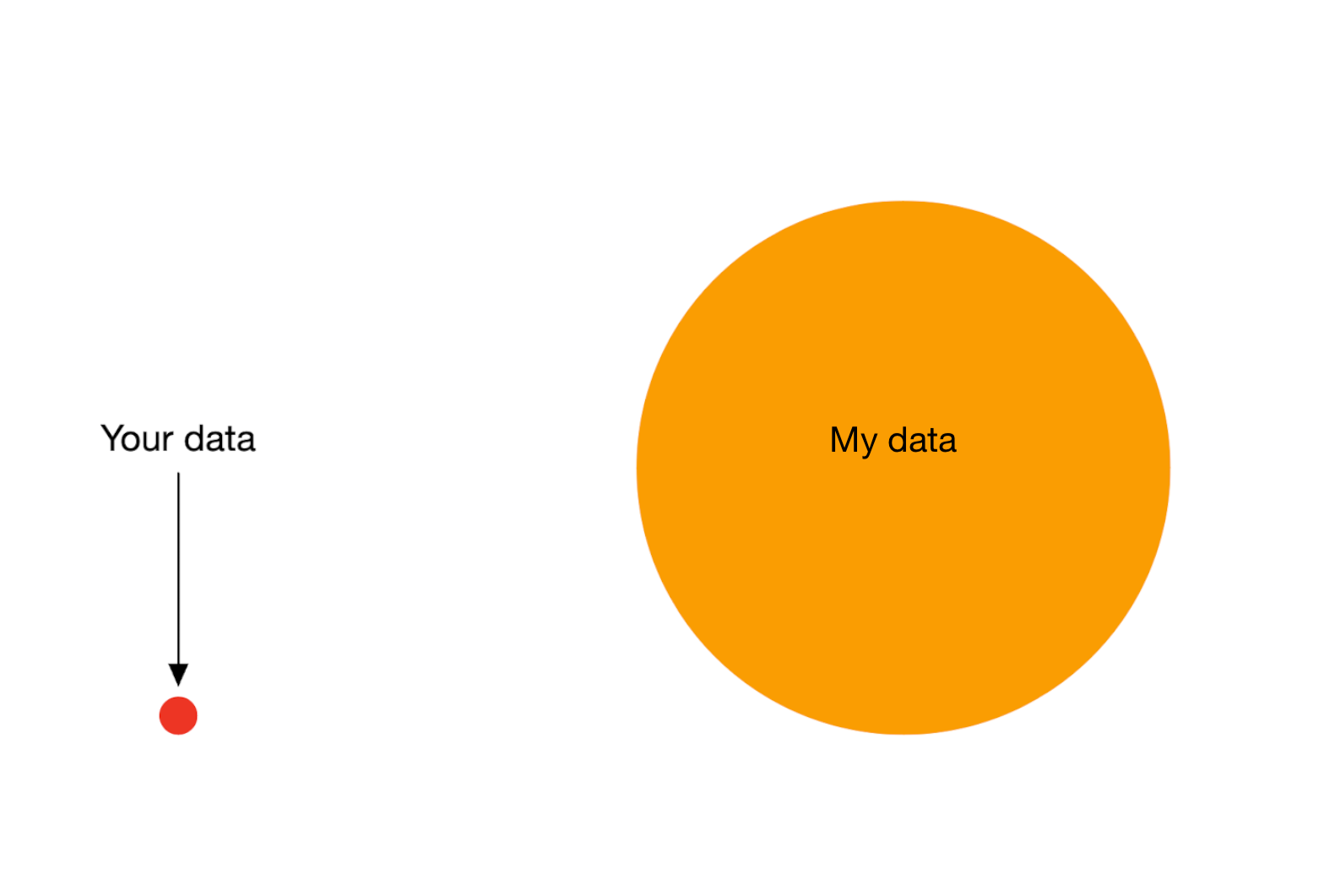

GPT-4 scale

rule-based vs LLMs

rule-based

- deduction from explicit knowledge

- knowledge verifiable and fast

- constrained to what is deducible from the rules

LLMs

- knowledge extracted from observed data

- unverifiable and compute intensive

- “chatGPT seems to know(?) much”

Using ML in research

ML versus statistics, when to use what

machine learning

- more data

- more complex functions (e.g. images)

statistics (e.g. GLMs)

- less data

- more domain knowledge

A sobering note

- ML in medicine has been ‘hot’ since at least the 1990s (Cooper et al. 1997)

- little evidence that it outperforms regression on most tasks (Christodoulou et al. 2019)

- though many ML studies are poorly conducted (Dhiman et al. 2022)

Safe use of AI models in medical practice

two questions

Question 1

- prediction model of \(Y|X\) fits the data really well (AUC = 0.99 and perfect calibration)

- will changing \(X\) induce a change in \(Y\)?

Question 2

- Give statins when the 10-year risk of a cardiovascular event exceeds 10%

- An ML model based on age, medication history and a cardiac CT scan predicts this risk very well

- will using this model for treatment decisions improve patient outcomes?

Improving the world is a causal task

- statistics / ML: what to expect when we passively observe the world

- not how we can intervene to make things better; that requires causality

- Question 1:

  - yellowish fingers predict lung cancer: should we paint fingers skin-colored?

  - weight loss predicts death in lung cancer: should we send patients to the couch with McDonald's?


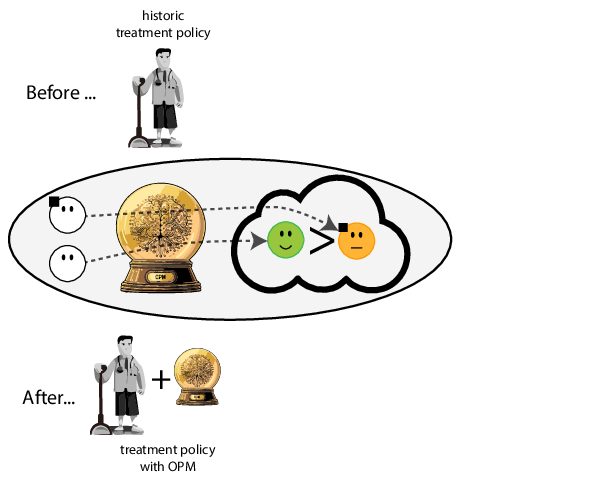

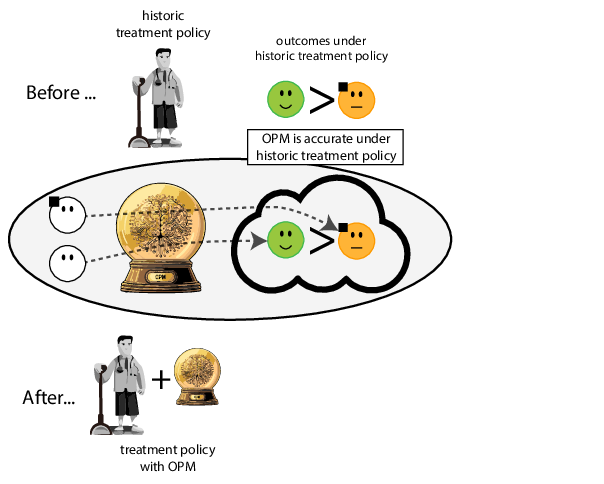

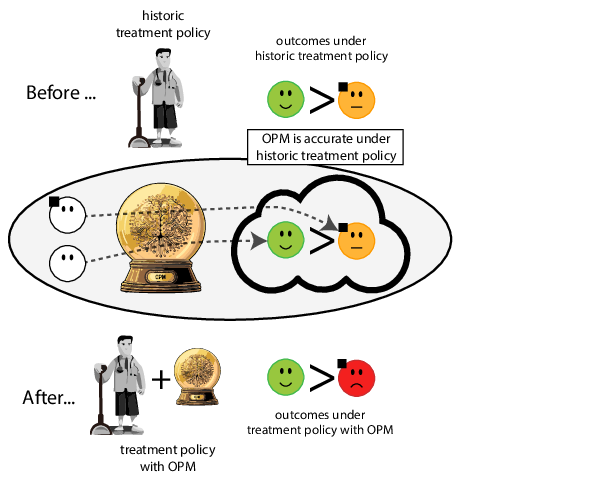

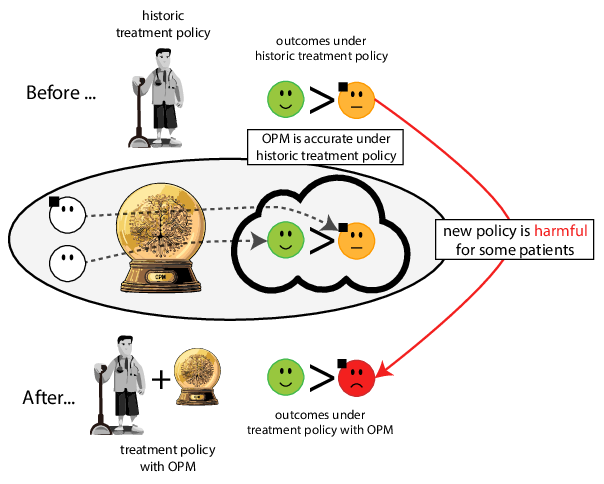

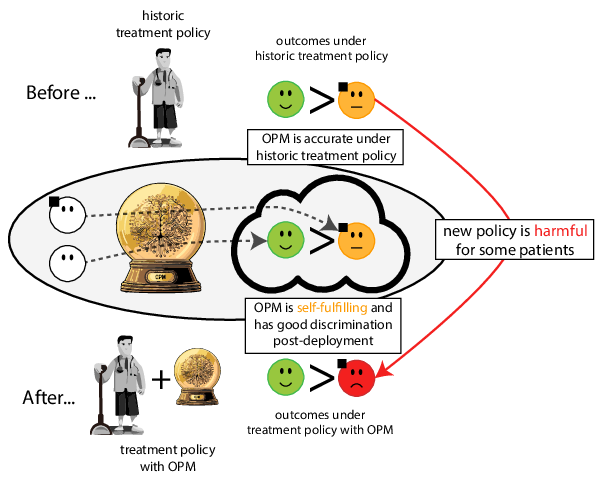

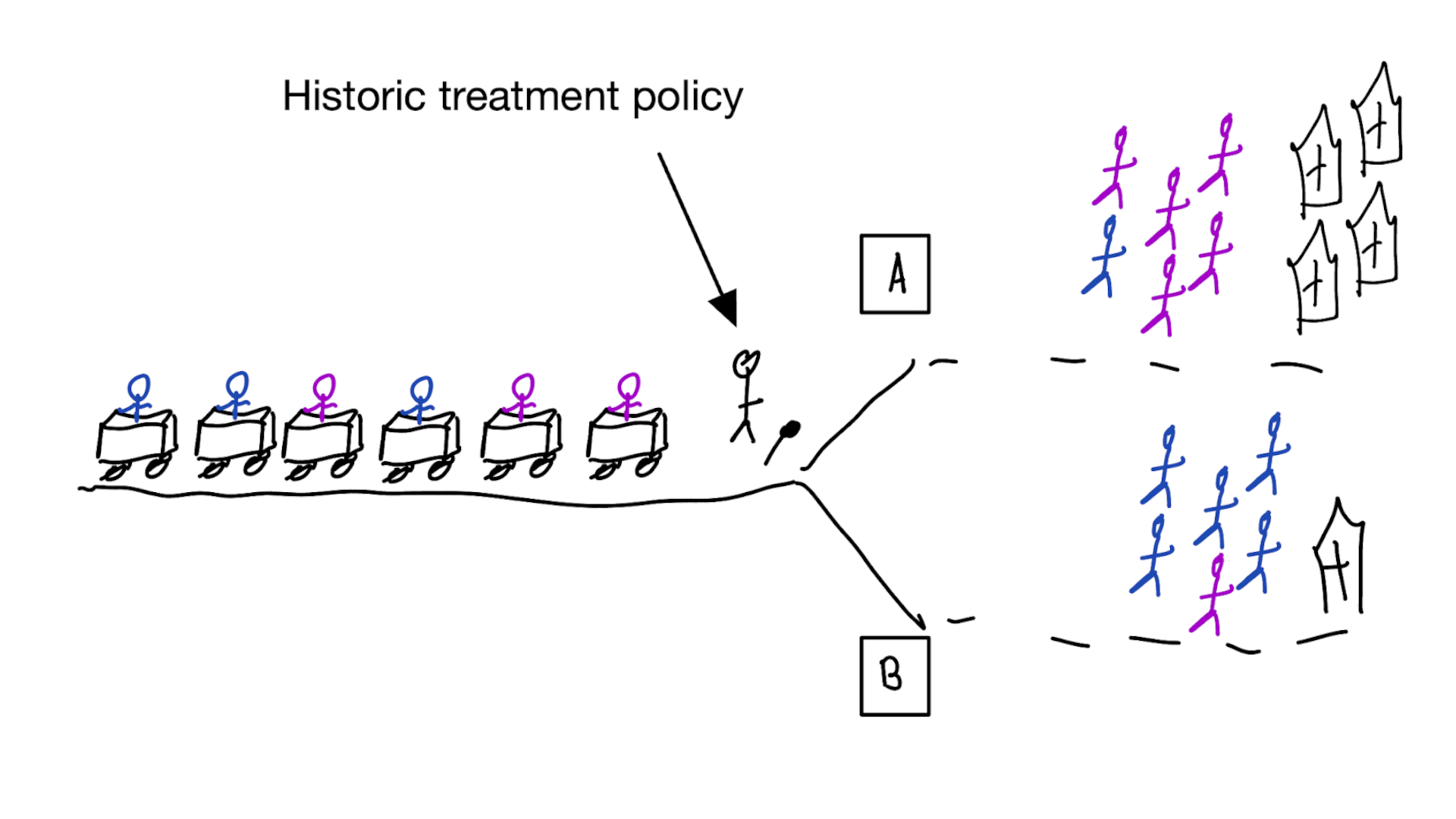

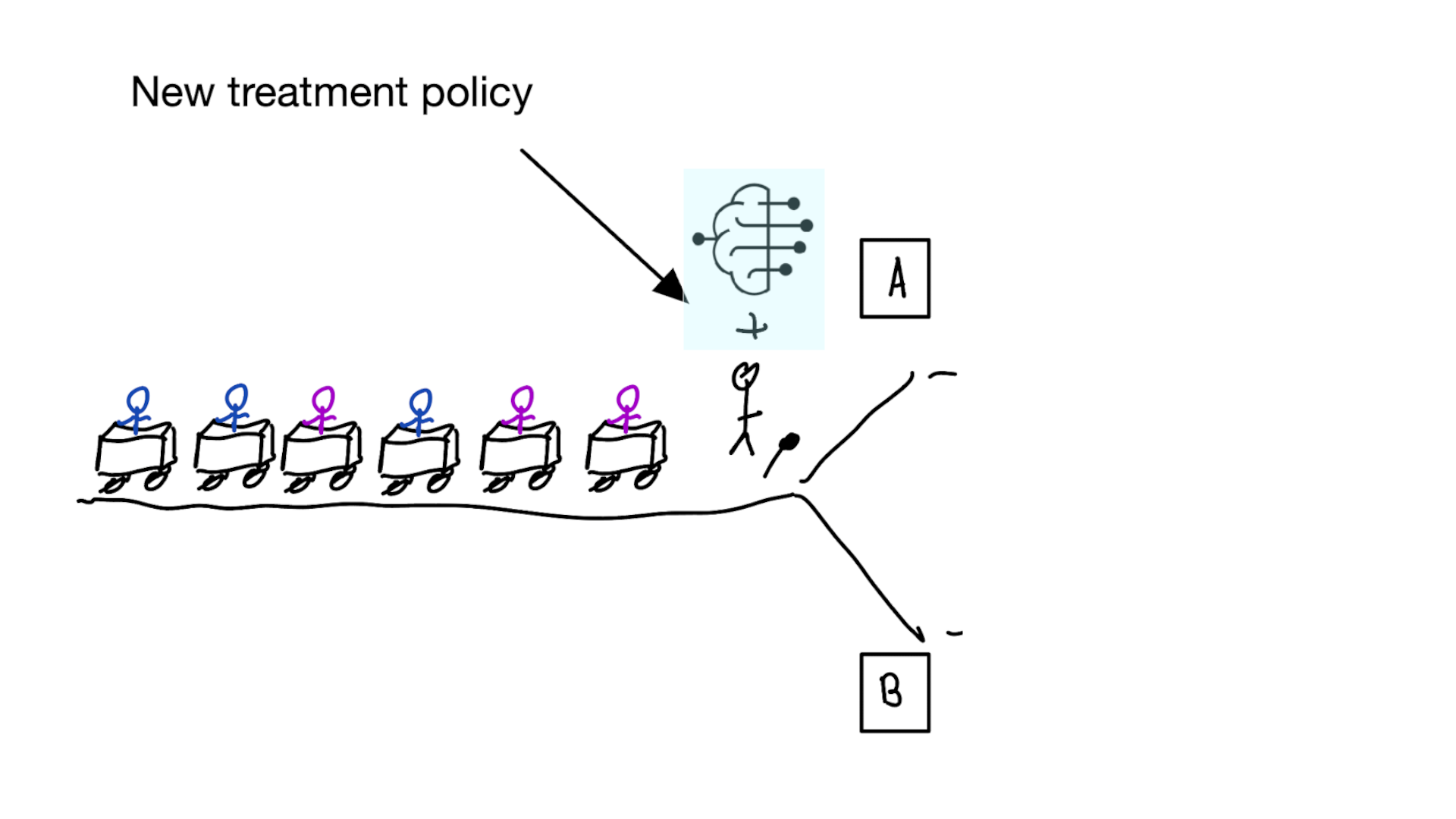

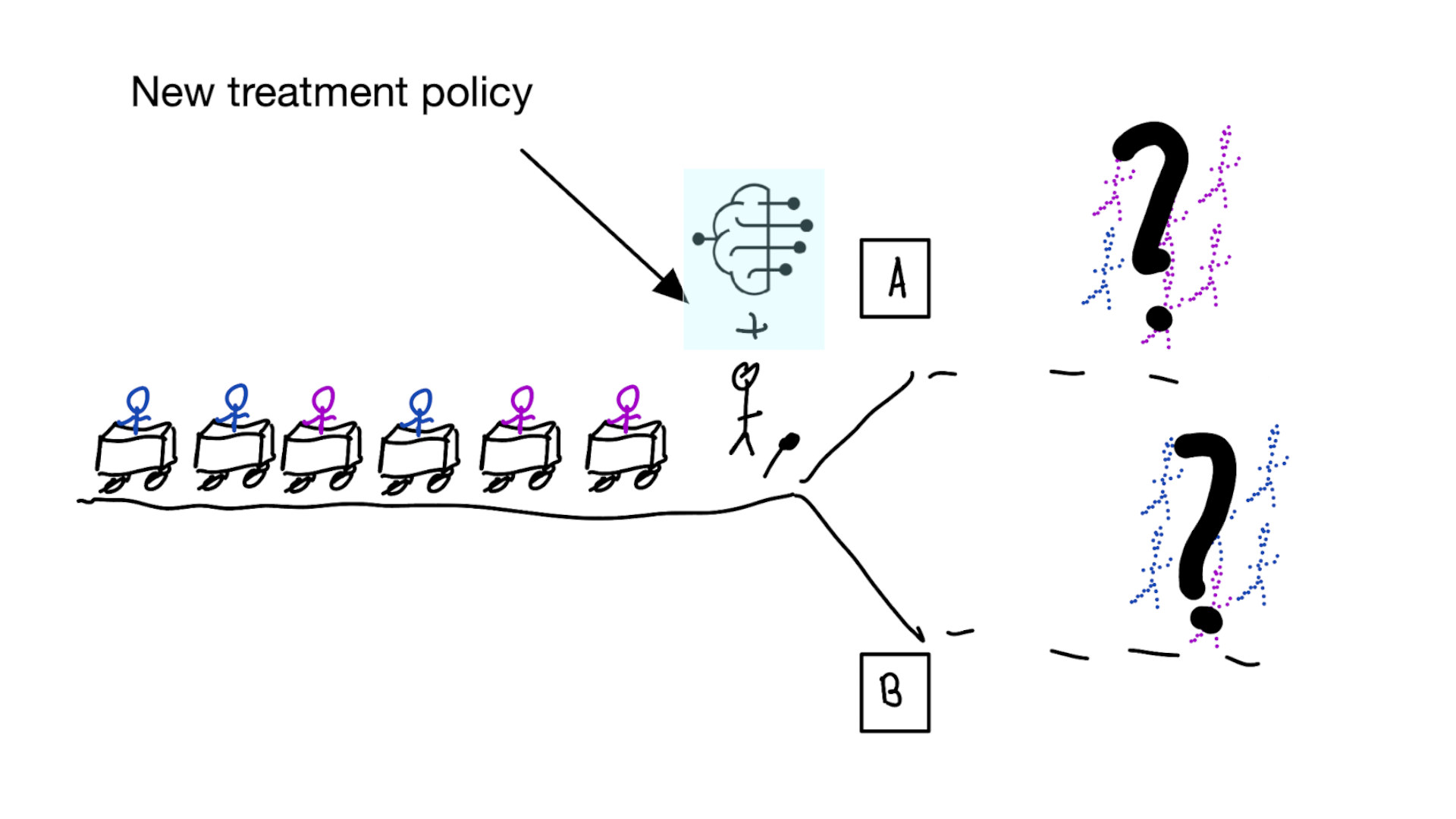

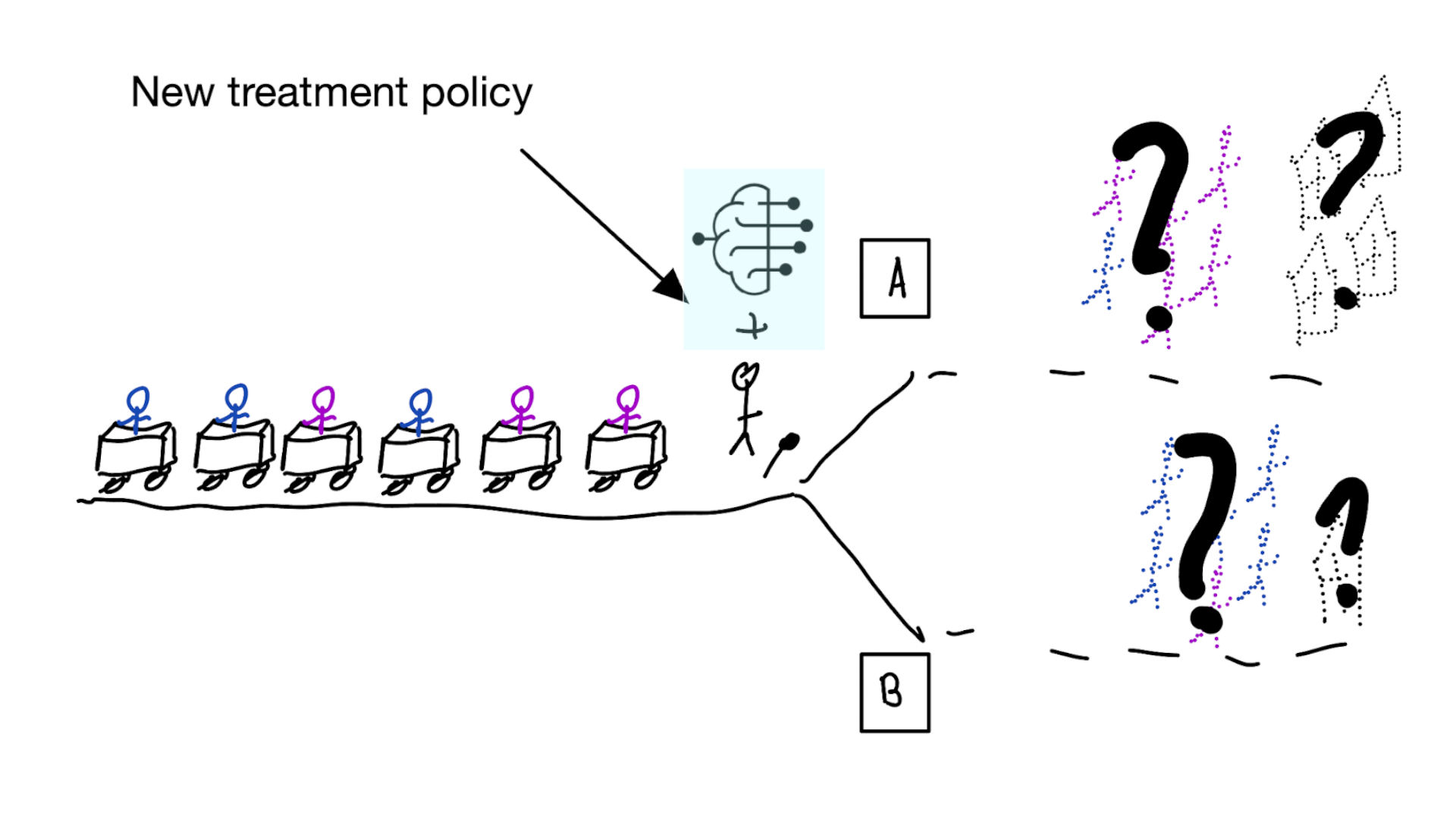

When accurate prediction models yield harmful self-fulfilling prophecies

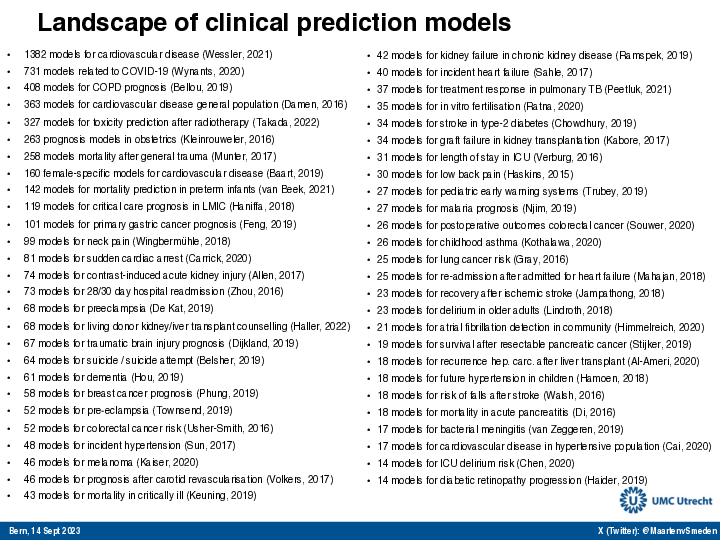

Prediction modeling is very popular in medical research

Tip

Building models for decision support without regard for the historical treatment policy is a bad idea.

Note

The question is not “is my model accurate before / after deployment?” but “did deploying the model improve patient outcomes?”
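The harm mechanism can be reproduced with a few lines of exact arithmetic. The setup is entirely hypothetical: a binary patient feature x, a treatment that lowers everyone's risk of death by 0.15, a historic policy of treating everyone, and a new policy that withholds treatment when the treatment-naive model predicts a risk above 0.5. The sketch shows the model's AUC improving after deployment (withholding treatment makes its high-risk predictions come true) while overall mortality rises.

```python
PREVALENCE = 0.5  # hypothetical share of patients with x = 1


def death_risk(x, treated):
    """Hypothetical true risk of death: x = 1 raises it, treatment lowers it by 0.15."""
    return 0.35 + 0.4 * x - 0.15 * treated


def outcomes_under(policy):
    """Exact death rate per value of x, and overall, when treatment = policy(x)."""
    rates = {x: death_risk(x, policy(x)) for x in (0, 1)}
    overall = PREVALENCE * rates[1] + (1 - PREVALENCE) * rates[0]
    return rates, overall


def auc_of_x(rates):
    """AUC of the binary score x for separating deaths from survivors."""
    q = PREVALENCE
    a = q * rates[1] / (q * rates[1] + (1 - q) * rates[0])  # P(x=1 | died)
    b = q * (1 - rates[1]) / (q * (1 - rates[1]) + (1 - q) * (1 - rates[0]))  # P(x=1 | survived)
    # P(score of a death > score of a survivor) + 0.5 * P(tie)
    return a * (1 - b) + 0.5 * (a * b + (1 - a) * (1 - b))


def treat_everyone(x):
    """Historic policy: everyone is treated."""
    return 1


# Treatment-naive model: learns risk(x) from historic data, ignoring treatment.
rates_hist, mort_hist = outcomes_under(treat_everyone)
predicted_risk = rates_hist


def model_guided(x):
    """New (hypothetical) policy: withhold treatment when predicted risk > 0.5."""
    return 0 if predicted_risk[x] > 0.5 else 1


rates_new, mort_new = outcomes_under(model_guided)
print(f"AUC: before {auc_of_x(rates_hist):.3f}, after {auc_of_x(rates_new):.3f}")
print(f"mortality: before {mort_hist:.3f}, after {mort_new:.3f}")
# AUC: before 0.708, after 0.776
# mortality: before 0.400, after 0.475
```

Exact expected rates are used instead of a simulation so the arithmetic can be checked by hand; a real study would have to estimate these quantities from data.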

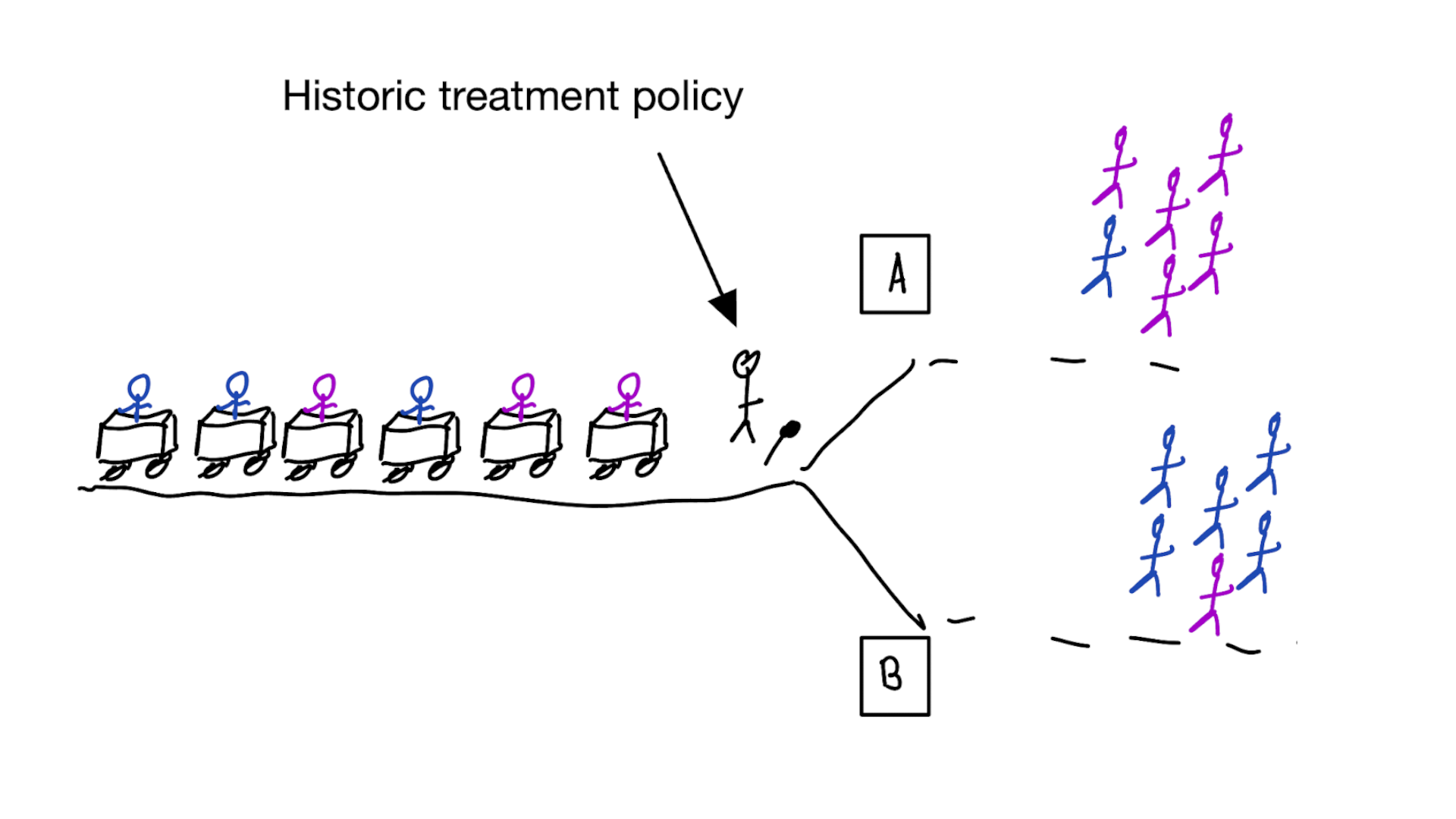

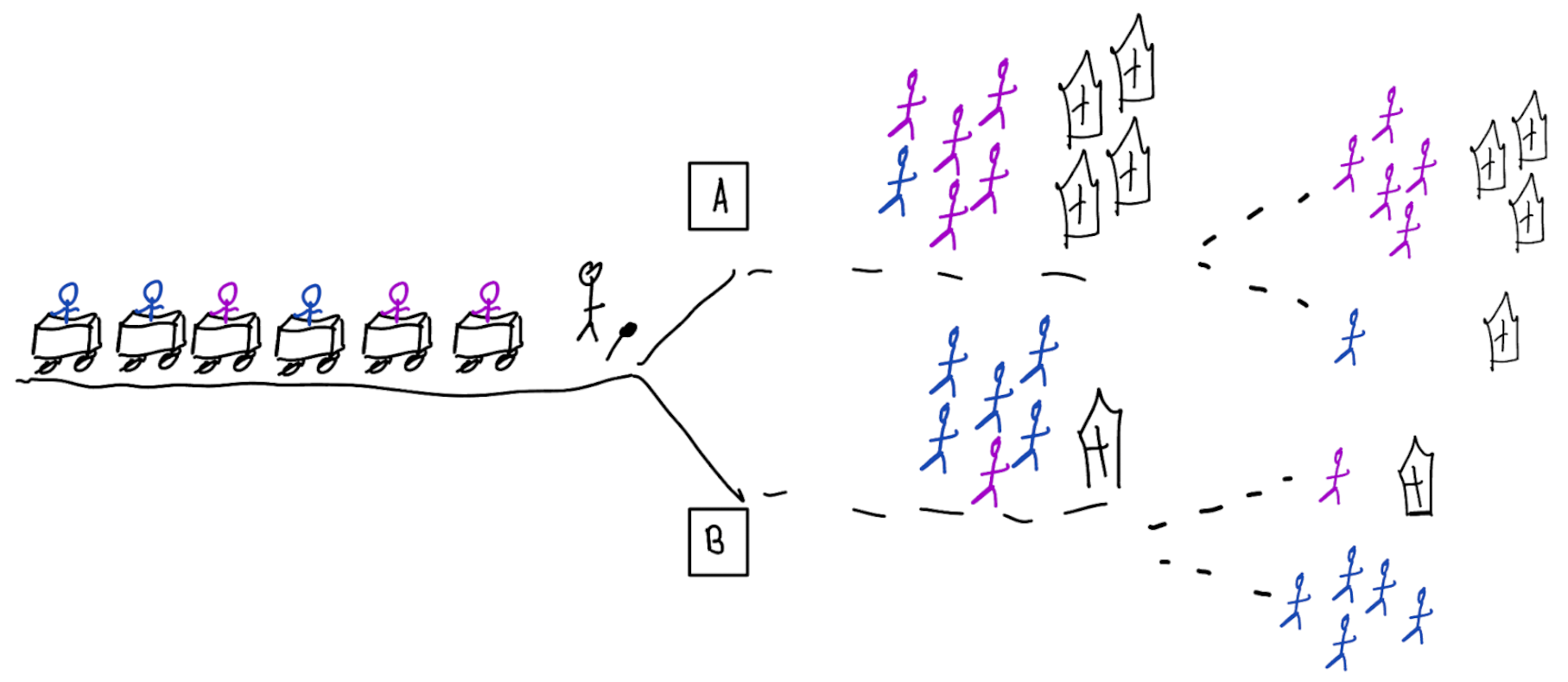

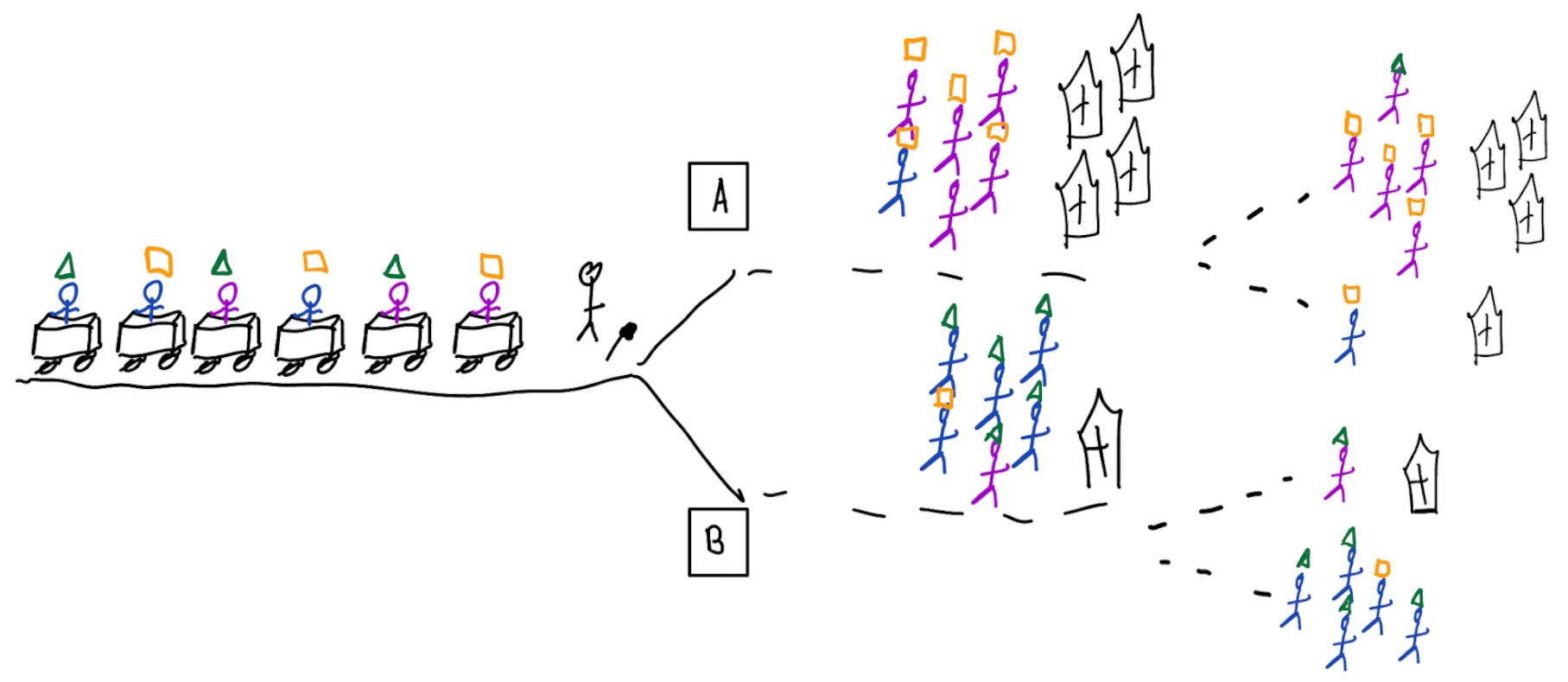

Treatment-naive risk models

Is this obvious?

Tip

It may seem obvious that you should not ignore historical treatments in your prediction models if you want to improve treatment decisions, but many such models are published every day, and some guidelines even allow implementing these models based on predictive performance alone.

Other risk models:

- condition on the treatment given and on patient traits

- unobserved confounding (e.g. hat type) leads to wrong treatment decisions

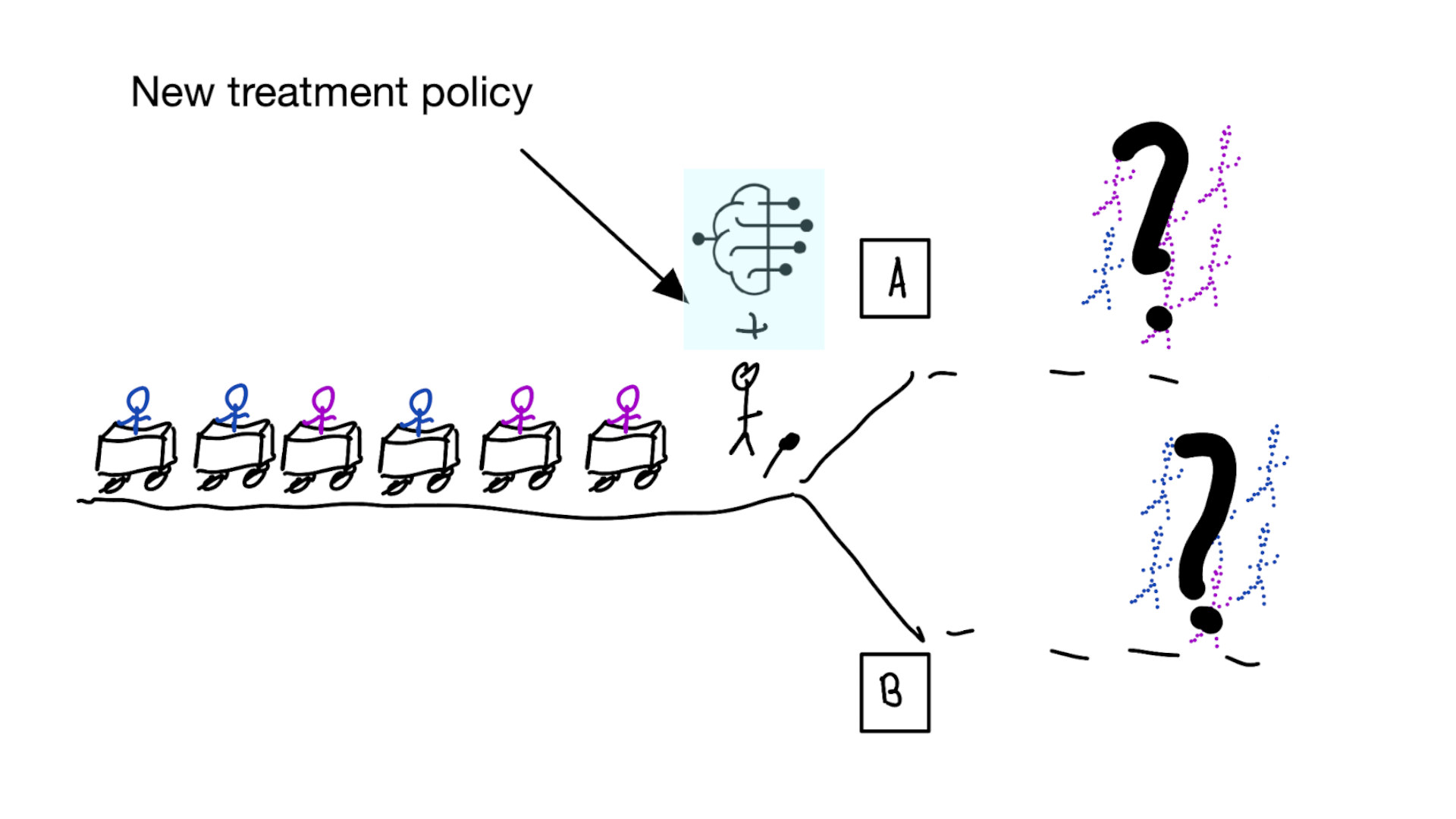

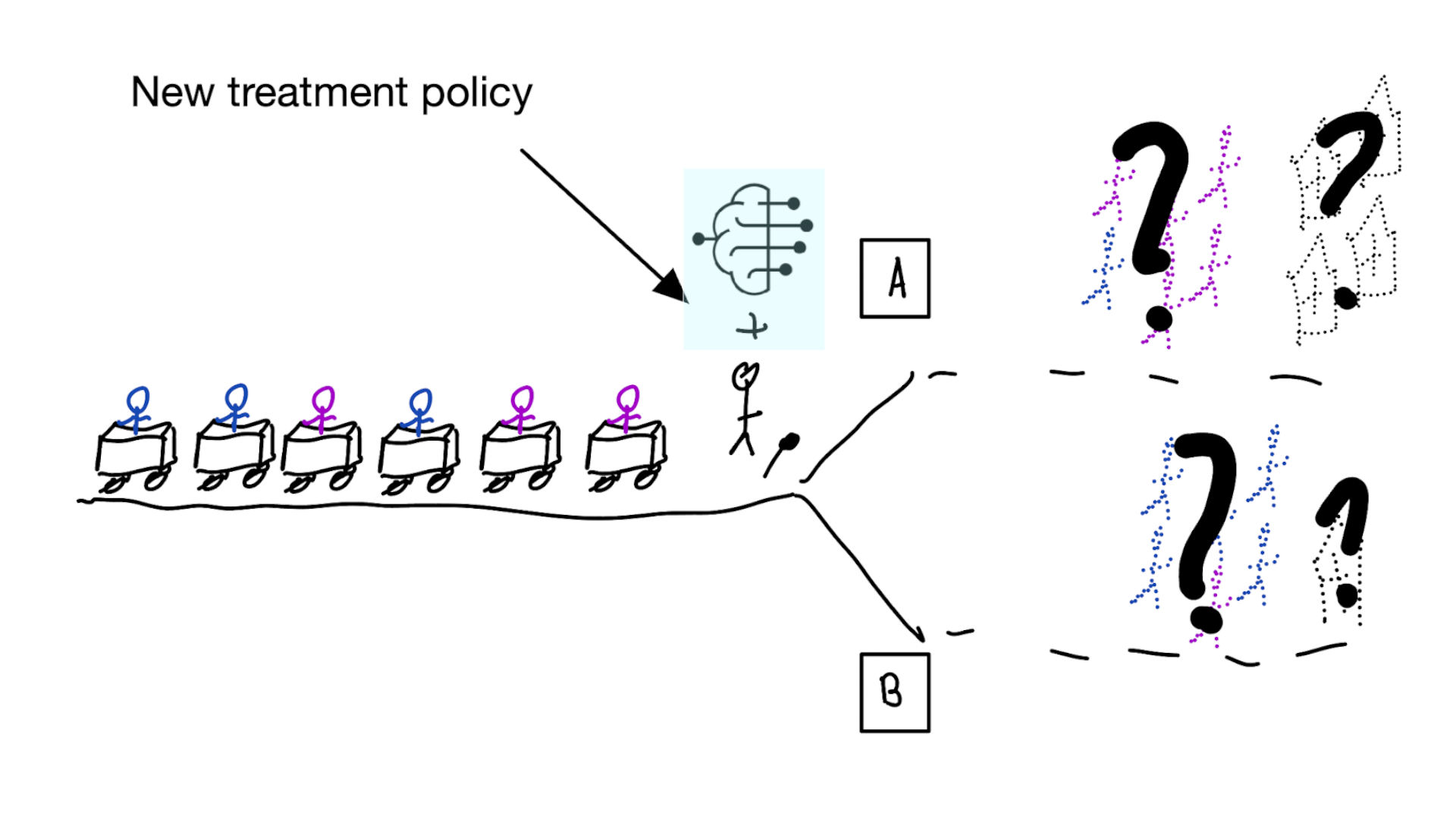

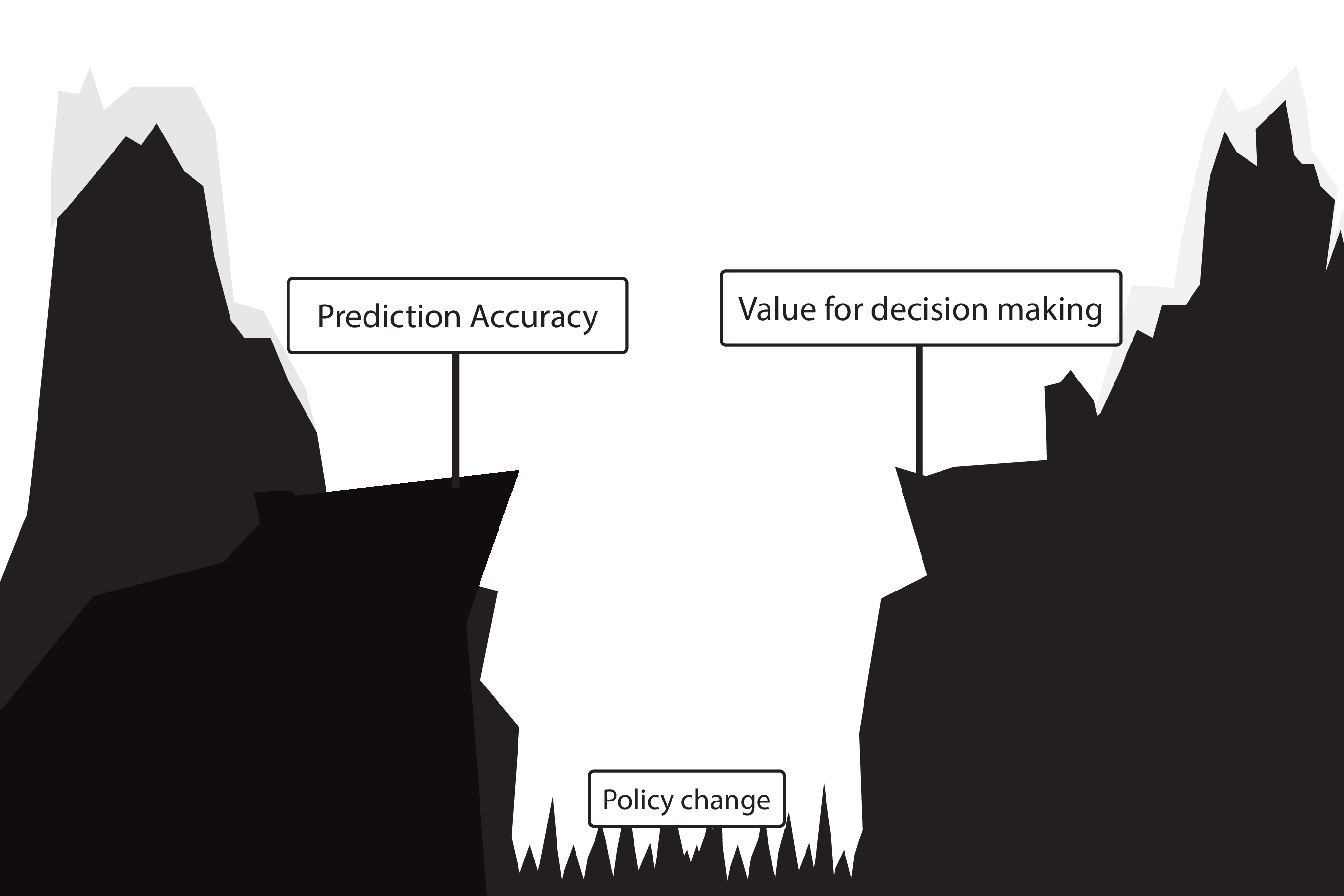

Recommended validation practices do not protect against harm

because they do not evaluate the policy change

Bigger data does not protect against harmful risk models

More flexible models do not protect against harmful risk models

Gap between prediction accuracy and value for decision making

What to do?


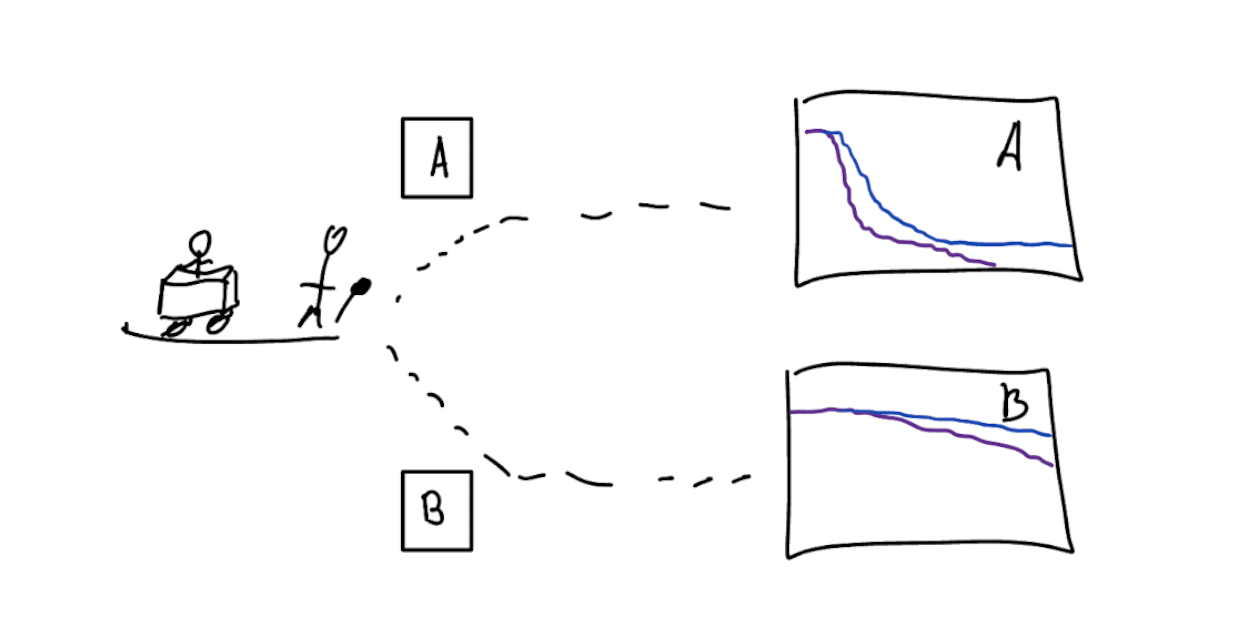

Evaluate policy change (cluster randomized controlled trial)

Build models that are likely to have value for decision making

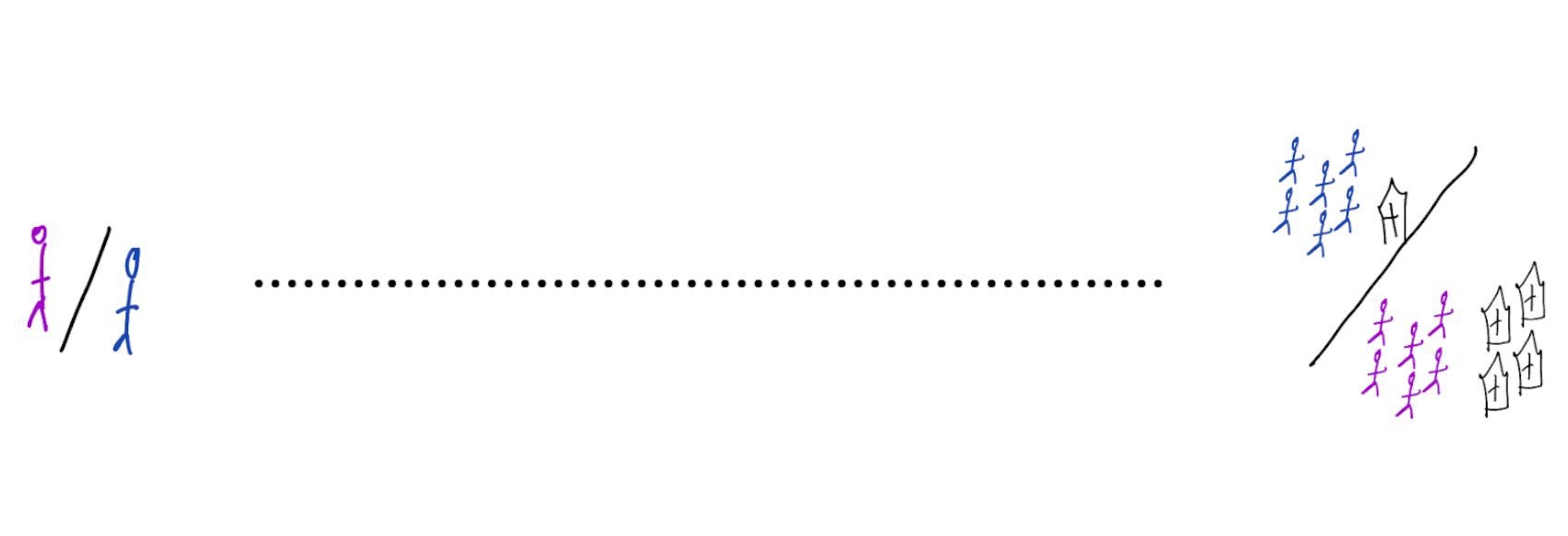

Prediction-under-intervention models

Predict the outcome under the hypothetical intervention of giving a certain treatment
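A minimal sketch of a prediction-under-intervention model, under strong assumptions: the data are made up and come from a randomized setting, so treatment is not confounded (with observational data, confounders such as the hat-type example would have to be measured and adjusted for); x is a single binary feature; and risks are estimated with simple stratified means. All names are hypothetical. Because the model predicts risk both with and without treatment, its effect on decisions is foreseeable.

```python
import random


def death_risk(x, treated):
    """Hypothetical true risk of death (made-up numbers): treatment helps everyone."""
    return 0.35 + 0.4 * x - 0.15 * treated


def simulate_trial(n, rng):
    """Hypothetical randomized data: treatment assigned by coin flip, so no confounding."""
    rows = []
    for _ in range(n):
        x = int(rng.random() < 0.5)
        t = int(rng.random() < 0.5)
        y = int(rng.random() < death_risk(x, t))
        rows.append((x, t, y))
    return rows


def fit_risk_under_intervention(rows):
    """Estimate P(death | x, do(treatment=t)) by stratified means.

    This is valid here only because t was randomized.
    """
    risk = {}
    for x in (0, 1):
        for t in (0, 1):
            ys = [y for xi, ti, y in rows if xi == x and ti == t]
            risk[(x, t)] = sum(ys) / len(ys)
    return risk


def recommend(risk, x):
    """Treat when the predicted risk under treatment is lower than without it."""
    return 1 if risk[(x, 1)] < risk[(x, 0)] else 0


rng = random.Random(0)
risk = fit_risk_under_intervention(simulate_trial(20000, rng))
for x in (0, 1):
    print(x, round(risk[(x, 0)], 3), round(risk[(x, 1)], 3), recommend(risk, x))
```

Unlike a treatment-naive model, this model recommends treatment for high-risk patients here, because it compares their predicted outcomes with and without treatment.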

When developing risk models, always discuss:

1. what is the effect on treatment policy?

2. what is the effect on patient outcomes?

Don’t assume predicting well leads to good decisions

think about the policy change

From algorithms to action: improving patient care requires causality (Amsterdam, Jong, et al. 2024)

When accurate prediction models yield harmful self-fulfilling prophecies (Amsterdam, Geloven, et al. 2024)

take-aways

- AI subsumes rule-based programs and machine learning

- machine learning is statistical learning from data with flexible models

- chatGPT does auto-regressive next-word prediction

- chatGPT produces beautiful mistakes: eloquently written logical fallacies

- prediction: what to expect when passively observing the world

- causality: what happens when I change something?

- prediction models can cause harmful self-fulfilling prophecies when used for decision making

- when building prediction models for decision support, you cannot ignore the treatment decisions in the historical data

- models for prediction-under-intervention have foreseeable effects when used for decision making

- the ultimate test of model utility is patient outcomes in a (cluster) RCT

thank you

©Wouter van Amsterdam — WvanAmsterdam — wvanamsterdam.com/talks